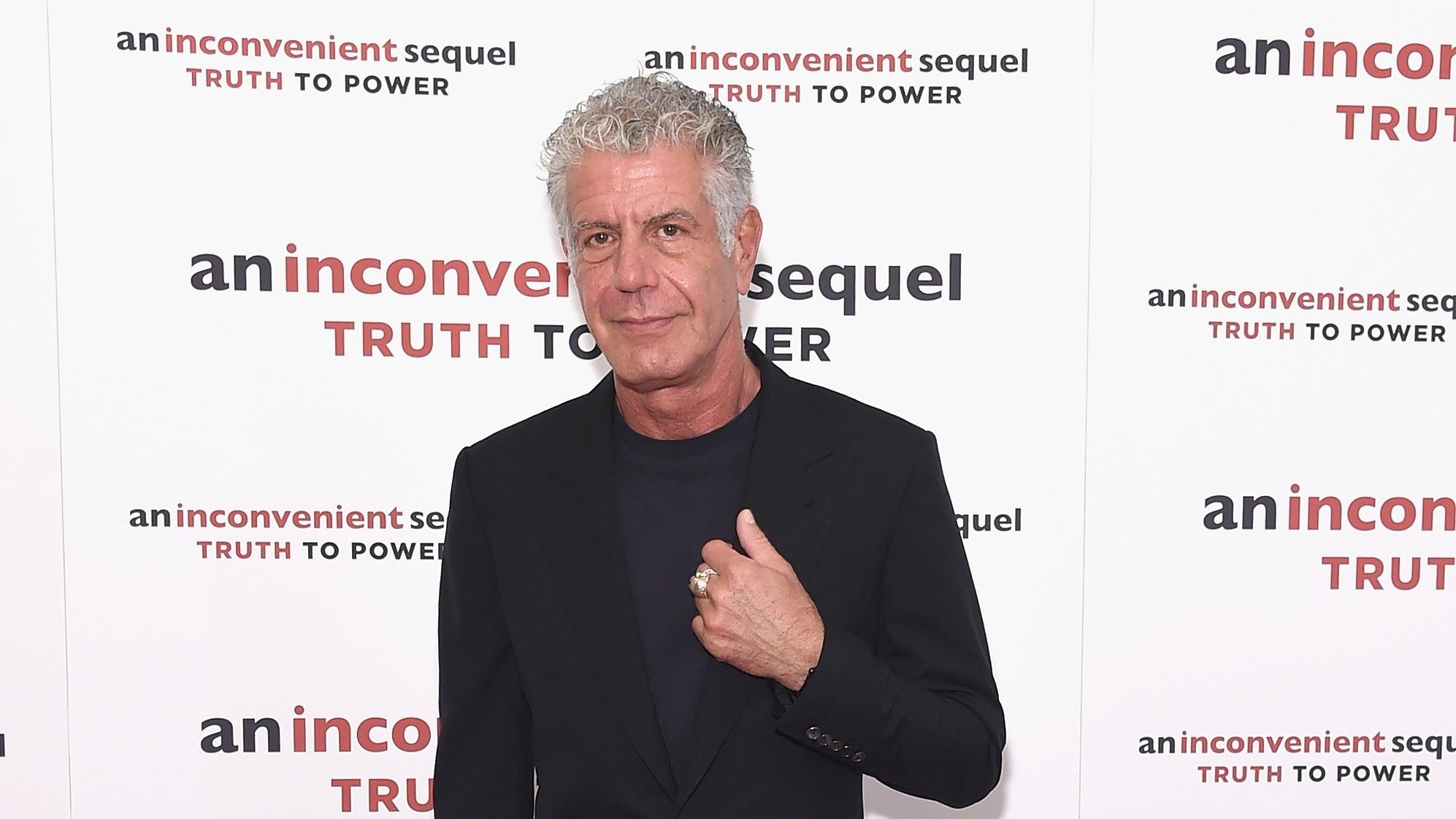

Anthony Bourdain documentary Roadrunner features a surprise AI recreation of his voice

Director Morgan Neville won't say which quotes in the film aren't really Bourdain's voice

Director Morgan Neville, whose previous films include Won’t You Be My Neighbor?, frames his new Anthony Bourdain documentary Roadrunner around clips of Bourdain’s own voice, allowing him to narrate the story of his own life. But a New Yorker piece on the film contains a surprising revelation from Neville: not all of the clips were made before Bourdain’s death. For three specific moments in the film, Neville wanted to use Bourdain quotes that he didn’t say out loud but wrote in emails or whatever, so Neville turned to “a software company” and used “about a dozen hours of recordings” to construct an AI recreation of Bourdain’s voice. And it’s not necessarily obvious in the film that some of the Bourdain clips weren’t really made by him, even if it’s clear that some of his narration was stitched together from other sources.

It’s not quite as horrific and inconceivable as Kanye West paying to make a hologram of Kim Kardashian’s dead father that talks about how great Kanye West is, but it’s certainly unusual. And a bit creepy. And particularly bizarre, given how Roadrunner positions Bourdain as someone who was so uncomfortable with fame that he probably wouldn’t have been thrilled with having his voice used to say things he never actually said out loud when he was alive. That also bumps up against the fact that Bourdain died by suicide, so any discussion of what he would’ve done or would’ve wanted is all that much more prickly, which may be partially why Roadrunner doesn’t underline the fact that some of its quotes aren’t things Bourdain literally said out loud.

Neville seems to acknowledge how unusual it is in the New Yorker interview, saying “we can have a documentary-ethics panel about it later,” but he pointedly refuses to say which lines in the movie are actual Bourdain recordings and which ones were readings performed by this AI (other than one example that’s explained in the New Yorker piece). There’s also a moment in the interview where Neville touches on another potential sticking point in the film, which is its lack of focus on Bourdain’s relationship with Asia Argento—who is not interviewed and was not approached about potentially being interviewed—before his death. Neville suggests that the last year of Bourdain’s life is like “narrative quicksand” in that anyone he talked to would have their own things to focus on and “none of those things actually bring you closer to understanding Tony.”

If you or someone you know is contemplating suicide, please call the National Suicide Prevention Lifeline at 1-800-273-8255.

43 Comments

Personally, I think this is unethical and has tainted the movie for me. Pity, I was really looking forward to it.

Yeah, it moved the film from something I would pay to see to something I am gonna pirate to watch. It’s not right, and the director’s response of “we can have a documentary-ethics panel about it later” is really shitty. Especially because you know Bourdain himself would have hated it.

I’m sure I’ll watch it and enjoy it enough still, but it’s gonna be gnawing at me the whole time.

Yeah, if he needs to ask a documentary-ethics panel whether it’s OK to deliberately mislead his audience, he’s got no business making “documentaries”.

Someone might need to explain to Neville how ethics committees work.

I’m 100% not watching it now. It’s not just unforgivable in general, it’s particularly ridiculous and disgusting given who Bourdain was.

They really did not need to have “him” reading that email to give it greater emotional impact and it honestly seems like a facile choice by the director, which also just makes me wonder about other choices likely to have been made and nah.

For real. This is gross and deeply disrespectful. And the piece also says, “Throughout the film, Neville and his team used stitched-together clips of Bourdain’s narration pulled from TV, radio, podcasts, and audiobooks.” So it sounds to me like there are three instances of AI Bourdain and a whole bunch of other spots where there are Frankenstein clips of him saying things that he didn’t actually say.

No, it’s literally describing the process of editing together pre-existing footage and media, i.e. how any movie like this gets made.

I think y’all are forgetting how sensationalized the AVClub gets in reporting on these sorts of things and are buying the snake oil. Other than the AI vocals, pretty sure you won’t find anything you wouldn’t find, well, in Won’t You Be My Neighbor.

A – That quote I pointed out is from the New Yorker piece, not concocted by the AVClub.

B – It could be describing normal editing, but I’m throwing out benefit of the doubt here because the filmmaker decided that using AI recreations was fine.

Not defending the use of the AI “reading” here, but as a documentary filmmaker, we use stitched together clips, audio and video, all the time, to create a narrative. It happens in print journalism, too. The quotes you see aren’t always one continuous thought, but oftentimes the best pieces culled from a long interview. I agree that filling in the audio gaps with uncredited AI recreations is ethically iffy, but “Frankensteining” is literally how you edit any piece of narrative storytelling, especially non-fiction works with a lot of different sources.

Same here. I just don’t want to watch it anymore.

Gross.

This seems like an awfully slippery slope. I’m reminded of that video going around with Hitler and Stalin singing “Video Killed the Radio Star”.

not all of the clips were made before Bourdain’s death. For three specific moments in the film, Neville wanted to use Bourdain quotes that he didn’t say out loud but wrote in emails or whatever, so Neville turned to “a software company” and used “about a dozen hours of recordings” to construct an AI recreation of Bourdain’s voice

Oh. Okay. This is terrible.

Neville suggests that the last year of Bourdain’s life is like “narrative quicksand” in that anyone he talked to would have their own things to focus on and “none of those things actually bring you closer to understanding Tony.”

Yeah, you’d hate to make a documentary about a guy and have to figure out how to navigate multiple POVs that may or may not fit into the neat little package you wanted to make. That’d be hard. Almost like work.

For the documentary, there are numerous other ways to have used the quotes without this technique. But that being said, the fact that it’s at least his own quotes makes this a little easier to permit. So it’s not going to dissuade me from seeing the film.

I think getting an impersonator to read Lincoln’s letters for Ken Burns is one thing, and weaving in doctored recordings with real ones is a whole ’nother. For one, you credit the impersonator and label reenactment footage.

As technology makes it easier and easier to pass off falsehoods as truth, it’s probably good that we put our foot down on the amount of truthfulness/forthrightness we expect from a documentary. I’m not outraged, but blurring the line between “this is a recording of the deceased” and “this is a manufactured recording of the deceased” seems well worth nipping in the bud.

I don’t doubt most people would agree that it’s a bad idea to have technology that could replicate someone’s voice well enough to manufacture lies, but given the nature of technology, how possible is it to actually nip this in the bud? Despite the initial outcry, there’s no stopping anyone from using a 3D printer to make a gun these days. No amount of legislation or appeals to common decency would have prevented that from happening.

I have a hard time believing that if this documentary didn’t do this (or even went so far as to say they considered it and ruled it out as a complete ethical monstrosity) it would stop the next generation of charlatans and crackpots from utilizing the technology to craft whatever lies they wish.

Whataboutism aside, I’m talking about the first thing you said: we just agree it’s a bad idea. You could stage events or use editing to de-or-recontextualize statements, but if folks find out about such ethical breaches, it usually sinks your documentary. It’s not a matter of regulating or controlling misuse; it’s a matter of not rewarding it.

Respectfully, I don’t agree with your characterization of my argument as whataboutism. For one thing, I think a necessary condition for whataboutism is that we be on opposite sides of an issue, more than likely partisan, in which one of us is attempting to undercut the other person’s moral standing to make such a claim. This was categorically not my intent. Unless you believe any analogy presented in an argument is automatically a tu quoque, I don’t think my claim that despite sound reasoning that it should not always do so, technology generally proceeds virtually unhindered (e.g.) is altogether that controversial.

Anyway, my initial read on this issue is that this is not so big of a deal in this instance, but I find it an interesting ethical issue that I’m not done thinking about. I certainly don’t hold it against anyone that thinks it is unambiguously wrong. Tomorrow, I may agree with you.

Ah, I get it now. You just want to argue for the sake of arguing.

What do you argue for the sake of?

If the director was honest about how and when they were using this technique – a little subtitle saying “Voice Recreation” – or somesuch, I might marvel at the technical prowess that went into recreating Bourdain’s voice. But I can’t get behind this deliberate attempt to obfuscate what’s genuine and what’s fabricated in what’s supposed to be a documentary. As you suggest, let’s draw a line while we still can.

Sorry, but this is much worse than any Kanye shenanigans. Especially in light of Bourdain’s distaste for certain elements of his fame. The fact that this doc is from CNN adds another level of disbelief. Almost as disturbing is the fact that Bourdain jettisoned a decades-long working partnership with Zach Zamboni after he clashed with Argento. A large part of the footage in the doc was likely shot by Zamboni.

EDIT: Bourdain tossing Zamboni aside was pretty rank. But that was his call. I meant to say that skipping that incident throws the doc into an awkward hole.

Deepfake movies and AI voices, the future is phony.

I get that they’re “his words” in that it’s from his writing, but this still feels gross and wrong. Certainly won’t stop me from watching it. Just one more log tossed onto the raging fire of hypocrisy that gnaws at my soul.

I don’t have too big a problem with it if all they’re doing is having the AI read text Bourdain actually wrote and published. I do think the director needs to add a disclaimer to every scene that uses the AI so we can decide for ourselves if we want to believe it.

This puts me in the position of having to trust the director didn’t grab a quote out of context or source it from something Bourdain may have written but didn’t want out in the world. There’s a big difference between an AI that sounds like him reading a passage from Kitchen Confidential and having it read something like a personal journal or notes in the margin of a manuscript that were never intended to be seen or heard by the public.

Yeah, it feels like the person who he was corresponding with could read the email and it would have just as much emotional impact.

Well, I guess we should just be grateful that they didn’t go full deepfake and have Bourdain mouthing the robot-generated text. The New Yorker piece doesn’t say whether or not there’s a disclaimer in the film noting the voice recreations — Neville’s remark that “you’re not going to know” suggests not. For me, that’s what crosses the ethical line. Even if the spoken material is totally innocuous, in the absence of a disclaimer the film is misleading the viewer by presenting it as if it’s actually Bourdain’s voice, and distorting our understanding of what he said. I mean, ultimately it’s almost certainly a very minor ethical breach, but I don’t think we should give it a pass. It opens the door to even creepier and more dishonest shenanigans. As a documentarian, you’d think Neville would know better.

I’m a documentary filmmaker, and this is GROSSLY unethical. I make historical documentaries for a production company, and we diligently source our quotations and handle them with care, and when we edit interviews, we check against the transcripts to ensure nothing has been taken out of context. It’s shit like this that gives media a bad name and fuels the claims of right wingers that it’s fake or manipulated. I will not watch this documentary.

That’s weird, Neville has just released a very authentic sounding recording of you saying you will watch this documentary and think you’ll enjoy it very much.

For three specific moments in the film, Neville wanted to use Bourdain quotes that he didn’t say out loud but wrote in emails or whatever,

Can’t help but notice a lot of the “outrage” below seems to be ignoring this bit. Is it unusual? Sure, but it’s not the filmmaker inventing quotes. It’s still things Bourdain said, just not in words, and it’d be odd for the flow of a film that will no doubt rely on Bourdain’s voice to suddenly go quiet or get an impersonator in.

“It’s still things Bourdain said, just not in words,”

Yeah, you’re going to have to explain how this kind of communication happens because it seems like you are reaching for a ‘metaphor’ but you don’t seem to know what that is, so…. Just go away?

I think any manipulation of his voice is unsettling. That it’s words he wrote down is slightly less egregious but there are still ethical implications. Perhaps he wrote it because he couldn’t bear to say it out loud. Perhaps he never intended a wider audience to see it. Perhaps it’s taken out of context and he was saying something ironically.

I think an impersonator would be lame, but I think having the words — if they’re something appropriate for public consumption — being read by a willing friend, someone who knew him, etc, would have been totally acceptable and impactful without the ick factor.

It’s done all the time, but honest people put “actor’s portrayal” or whatever under it. Because they’re not liars, so they don’t want to lie to their audience.

The “…or whatever” part gives me pause. There might not be a recording of Bourdain saying a certain thing because he never intended anyone but the person he was communicating with to hear it.

Another thing that becomes a concern is context and emphasis. Bourdain was a very nuanced writer and speaker. That was one of the more charming parts of his persona: he was far more eloquent than you’d ever expect a guy in his field of work to be. There was often meaning in what he said that went beyond just the words he was saying. No algorithm can emulate that.

Is it unusual sure but it’s not the filmmaker inventing quotes.

Nobody’s saying that the quotes were invented by the filmmaker. The outrage stems from the fact that the recordings were created using AI. If there’s no recording of Bourdain saying it, then sorry, you don’t get to use that recording in your movie. That’s how it works.

I hope the AI voice is used for his famous “fuck Baby Driver” quote.

I heard the movie ends like this:

I would be fine not hearing anything about Anthony Bourdain for years, honestly. I don’t really understand the cult of personality around him.

The issue with using AI to read his email is that a computer-generated voice of that person might have a tone that may or may not be what he was implying with his written word. But since it’s his voice, that tone sticks. Ken Burns and many other documentarians have already shown how powerful a person’s written words can be when read by someone else.

The ghost of Max Brod just said, “That’s crossing a line, bro.”